GallowGlass AI Labs

Skunk Works

About GallowGlass AI Labs

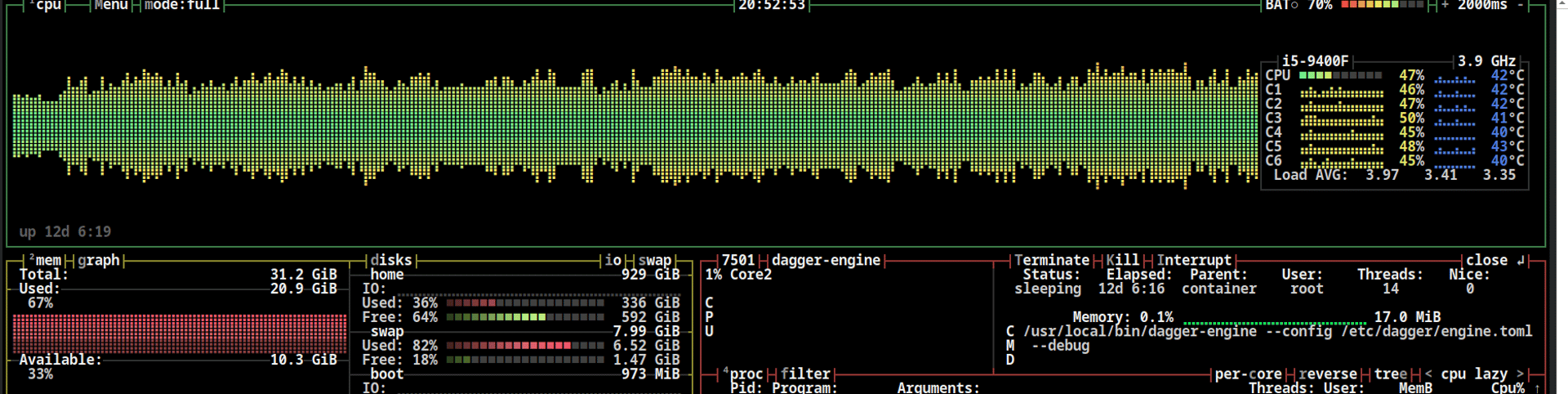

GallowGlass AI Labs is the Skunk Works where we test AI technologies, mostly on a local custom-built server named caped-crusader after my favorite superhero.

Caped-Crusader's configuration is shown in the title background picture above. It also has 2 SSDs and 2 hybrid drives. One hybrid drive hosts a MinIO server, an Amazon S3-compatible object storage system.

Notably, the box lacks an Nvidia GPU. Well, Nvidia's Linux drivers were, well, you know... ask Linus. Caped-Crusader has had upgrades over the years as needed.

Caped-Crusader's operating system is Fedora 41; Fedora has been my Linux distro of choice for years. Incidentally, Amazon Linux 2023 is based on upstream Fedora. Given the dated hardware, circa 2019, caped-crusader is quite plucky with AI applications. However, it can be laughably slow at times (see the post below).

AWS is used for development and testing of production systems.

Most distilled models and SmolLMs are also tested on a Raspberry Pi 5 with a Hailo AI module, called Magellan, containing a Neural Processing Unit (NPU). The elderly Nvidia Jetson Nano is used for legacy hardware AI, since it is impossible to get one's hands on the new Jetson Orin.

My open source development environment contains the usual suspects from the impossibly large open source ecosystem, such as Docker, Nginx, Apache, Postgres, Redis, Minikube (K8s) and more. If needed, LaTeX is used for all documentation.

From the burgeoning open source AI universe, AI-specific packages and frameworks include Ollama, LM Studio, Open WebUI, CrewAI, Qdrant, LangChain, GretelAI, Moonshot, Dioptra, Label Studio, etc.

Naturally, I use the only real text editor in the world, which is Vi/Vim (RIP Bram Moolenaar). By the way, if you use VS Code, install PlatformIO (see the final paragraph of this section); it is useful for hardware programming.

Does anyone still use Emacs, and if so, why? Just kidding; always use the editor of your choice, including Emacs. One should be comfortable with one's own tools.

As for microcontroller/chip programming, 3D design and PCB layout, caped-crusader is armed with PlatformIO, the Arduino IDE, FreeCAD, KiCad and Fritzing.

DeepSeek-R1: The Lord Byron test

This is a (very) brief walk-through of the DeepSeek-R1 LLM (hereafter referred to as dsr1). DeepSeek-R1 is one of the new crop of reasoning models that use Chain of Thought (CoT).

Chain of thought mirrors human reasoning: in other words, think it through and show us the thought process used to arrive at the answer. dsr1's CoT process is shown between the <think>CoT</think> XML-style tags, and the amount of self-referential vocabulary is interesting.
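To look at the reasoning separately from the answer, the text between the <think> tags can be pulled out with a few lines of Python. This is only a minimal sketch: the function names, the sample response and the tiny list of first-person words are all my own illustrative assumptions, and it assumes the raw response contains at most one <think>...</think> span.

```python
import re

# Matches the first <think>...</think> span; DOTALL lets the reasoning run over many lines.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

# Hypothetical, deliberately tiny list of first-person words to count.
SELF_WORDS = {"i", "i'm", "i'll", "me", "my", "myself"}


def split_reasoning(response: str) -> tuple[str, str]:
    """Return (chain_of_thought, final_answer) from a raw model response."""
    match = THINK_RE.search(response)
    if match is None:
        # No tags found: treat the whole response as the answer.
        return "", response.strip()
    thought = match.group(1).strip()
    answer = (response[:match.start()] + response[match.end():]).strip()
    return thought, answer


def count_self_references(thought: str) -> int:
    """Count first-person words in the reasoning text (a crude measure)."""
    words = re.findall(r"[a-z']+", thought.lower())
    return sum(1 for word in words if word in SELF_WORDS)


# Made-up response text, for illustration only (not real dsr1 output).
sample = (
    "<think>\nOkay, the user asks about Byron. Let me recall what I know.\n</think>\n\n"
    "Lord Byron was an English Romantic poet."
)
thought, answer = split_reasoning(sample)
print("self-references:", count_self_references(thought))
print("answer:", answer)
```

Counting words from a fixed list is crude, but it is enough to compare how "chatty" the reasoning is from one prompt to the next.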

So what is the Lord Byron test? Well, every AI developer, engineer and hobbyist has their own favorite battery of tests to measure a model's responses for veracity, accuracy, applicability and capability.

As an example, the incomparable AI entrepreneur and enthusiast Matt Berman loves the four killers puzzle, or is it the five killers puzzle? Answers to these questions and to other brain teasers test the model's response capabilities.

My Lord Byron test is usually the first test for all my models: a couple of questions about the great Romantic poet. Trust me, it is nothing scientific or sophisticated, but it is fun.

Essentially, I first ask the model to tell me about Lord Byron.

Who is Lord Byron?

then the follow-up, with a slight twist,

there is a connection between Lord Byron and computer programming?

Byron's only legitimate daughter was Ada Lovelace, considered the world's first programmer.

dsr1's answers to both questions contain multiple comical errors and laugh-out-loud conclusions.

And the final question,

thanks but you made a crucial error can you figure it out?

At the 3:32 mark, dsr1 comes up with preposterous and confusing thought patterns. Multiple errors, strange alphabets and comical conclusions are scattered throughout the response. Eventually, the model does arrive at the correct answer, at the end.

Oh, the entire test took an outlandish 11.47 minutes as caped-crusader struggled with the hefty model, and to think this was an easy test.

As a result, the walk-through was compressed to 5:43. Trust me, it was painful to watch.

I dread how long code generation would take.
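For anyone who wants to repeat the three-question run and time it, here is a rough sketch against Ollama's local REST chat endpoint using only the Python standard library. It rests on several assumptions: that Ollama is serving on its default port, that the model was pulled under the tag "deepseek-r1", and that the three questions were asked in a single conversation so the follow-ups carry history. The helper names are my own, not part of any library.

```python
import json
import time
import urllib.request

# Assumptions: default local Ollama port and a model tag of "deepseek-r1".
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL = "deepseek-r1"

# The three Lord Byron prompts, typed as in the post.
BYRON_PROMPTS = [
    "Who is Lord Byron?",
    "there is a connection between Lord Byron and computer programming?",
    "thanks but you made a crucial error can you figure it out?",
]


def build_payload(model, messages):
    """Build the JSON body for one non-streaming chat request."""
    return {"model": model, "messages": list(messages), "stream": False}


def ask(messages, model=MODEL, url=OLLAMA_CHAT_URL):
    """Send the conversation so far and return the assistant's reply text."""
    body = json.dumps(build_payload(model, messages)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]


def run_battery(prompts, send=ask):
    """Ask each prompt in turn, keeping history so follow-ups have context."""
    messages, timings = [], []
    for prompt in prompts:
        messages.append({"role": "user", "content": prompt})
        start = time.perf_counter()
        reply = send(messages)
        timings.append(time.perf_counter() - start)
        messages.append({"role": "assistant", "content": reply})
    return messages, timings


def main():
    """Run the battery against the local server and print minutes per prompt."""
    _, timings = run_battery(BYRON_PROMPTS)
    for prompt, seconds in zip(BYRON_PROMPTS, timings):
        print(f"{seconds / 60:6.2f} min  {prompt}")
    print(f"{sum(timings) / 60:6.2f} min  total")
```

Calling `main()` with the server running prints one line per prompt plus a total. The `send` parameter exists so the loop can be tried with a stand-in function before committing a slow box to the real thing.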

References